@2021 invictIQ is a venture by Sprint Consultancy. All rights reserved.

Ethical Puzzles: Navigating AI Grey Areas in Elderly Care

by Sangha Chakravarty

In the corridors of Sunrise of Bassett, lavender oil mingles with the soft chime of an electronic reminder. Mrs. Miggins, a resident with a twinkle in her eye, steps out for her stroll, her smart pendant silently tracking her path. Yet, beneath the veneer of technological support lies a maze of ethical questions, awaiting careful consideration.

The AI Lifeblood

Before we explore Mrs. Miggins’ world with technology, let us understand something important. Data, the lifeblood of AI, isn’t just numbers. It is a story made from Mrs. Miggins’ steps, her sleep, and even the looks on her face. The ethical debate isn’t just about ‘what’ data is collected, but also ‘why’ and ‘how’ it is collected, and who has control over it.

Think of Mrs. Miggins’ smart pendant not just tracking where she is but also studying her steps, sleep, and facial expressions. While it gives useful information, it raises concerns about her privacy and autonomy. Is Mrs. Miggins fully aware of all the data collected, and does she have a say in this? This delicate balance between protection and transparency, between trust and technology, is the shared language we must learn to speak fluently in the new world of AI.

Building Trust

Before care providers venture into AI-powered data mining, building a bridge of trust is paramount. Open communication, shared decision-making, and ongoing education, pursued hand in hand with residents, their friends and families, and staff, are the bricks of that bridge.

Think “Tech Thursdays,” open forums where residents voice their anxieties about smart cameras or data tracking. Or imagine family “Tech Circles,” where grandchildren learn alongside grandparents, dispelling myths and fostering collaboration. By demystifying how AI uses data and opening dialogues, you can pave the way for trust, ensuring AI becomes a welcomed support, not a silent intruder.

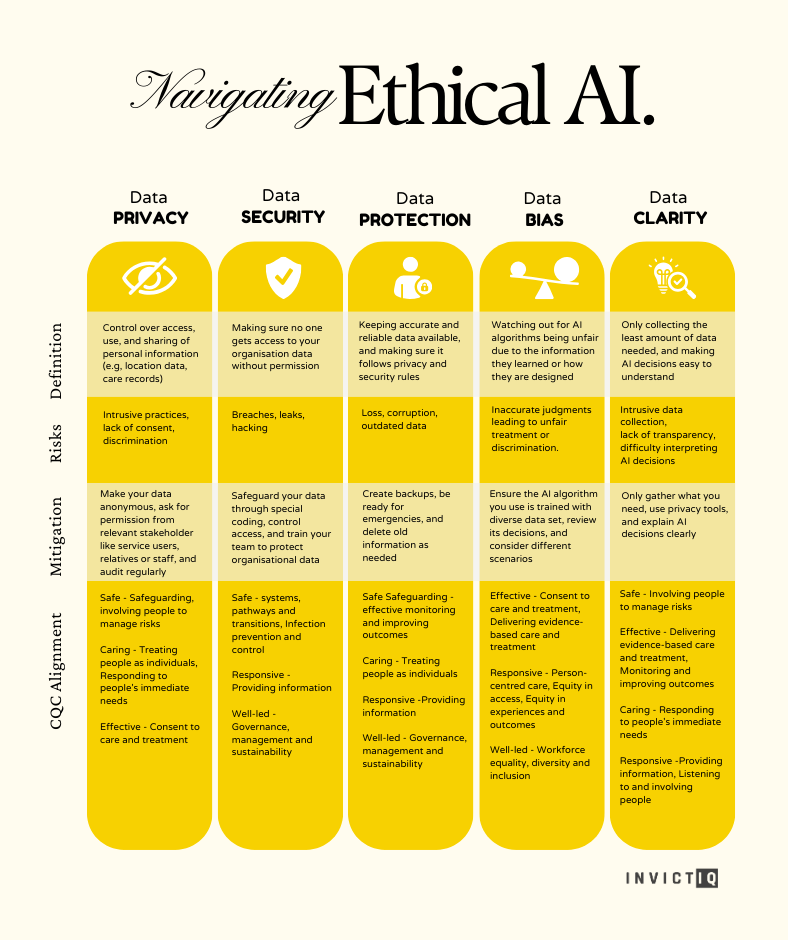

Data’s Guardians

Mrs. Miggins’ location data, her care records, even the digital echoes of her facial expressions – these are not mere statistics, but fragments of her story, deserving of a fortress.

Data Privacy ensures Mrs. Miggins retains the keys to this fortress, owning and controlling how her narrative unfolds. Data Security is the gatekeeper, protecting her data against unauthorised access, while Data Protection guards against inaccuracies and breaches. Remember, a single data leak can be more than a technical glitch; it can leave Mrs. Miggins exposed and her story vulnerable.

But the AI maze also holds other perils. Imagine Mrs. Miggins’ smart pendant rerouting her on a walk, a seemingly helpful nudge that quietly strips away her autonomy. This balance between protection and individual choice demands a nuanced approach. According to Gartner, over 80% of AI projects fail, often due to poor data quality. Are we prepared for algorithms misinterpreting Mrs. Miggins’ needs, leading her down a path of inaccurate, even harmful care?

Ethical Tools

Navigating the ethical maze of AI in elderly care requires a well-stocked toolbox. For data privacy, the shield of anonymisation can cloak personal details. Imagine Mrs. Miggins’ sleep patterns translated into anonymised graphs, helping caregivers identify potential health issues without revealing the intimate details of her slumber. This is how we navigate the delicate balance between protecting personal data and harnessing its potential for good.
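As a rough illustration of the idea above, here is a minimal Python sketch. It is an assumption-laden example, not a description of any particular product: the function names, the secret key, and the sleep figures are all hypothetical. Strictly speaking, it shows pseudonymisation plus aggregation rather than full anonymisation: the resident’s name is replaced by a keyed token, and nightly detail is reduced to a coarse summary.

```python
import hashlib
import hmac
from statistics import mean

# Hypothetical secret held by the care provider and stored apart from the data.
SECRET_KEY = b"replace-with-a-securely-stored-secret"


def pseudonym(resident_name: str) -> str:
    """Return a stable token that stands in for the resident's name."""
    digest = hmac.new(SECRET_KEY, resident_name.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:12]


def summarise_sleep(resident_name: str, nightly_hours: list[float]) -> dict:
    """Reduce night-by-night records to a coarse summary keyed by pseudonym."""
    return {
        "resident": pseudonym(resident_name),
        "nights": len(nightly_hours),
        "average_hours": round(mean(nightly_hours), 1),
        "shortest_night": min(nightly_hours),
    }


# Illustrative figures only: one week of sleep durations in hours.
summary = summarise_sleep("Mrs. Miggins", [7.5, 6.0, 4.5, 7.0, 6.5, 5.0, 7.5])
print(summary)
```

A caregiver reviewing the summary sees a trend worth following up, not a name or a night-by-night log. Pseudonymised data can still count as personal data, so a sketch like this complements consent and access controls rather than replacing them.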

Build trust with Mrs. Miggins and her family through informed consent, so that they understand how their data is used and have a say in its journey. Use regular audits to identify and mitigate risks before they materialise.

Then there are the perils of inaccurate AI predictions, where algorithms misinterpret Mrs. Miggins’ needs and lead her down a path of unreliable care. You can combat this with rigorous testing and real-time feedback mechanisms that constantly correct the course and ensure AI recommendations are informed by accurate data.

Finally, even the most sophisticated tools cannot replace the human touch. That is why the “Human-in-the-loop” principle is your north star. It keeps AI recommendations anchored in the wisdom, compassion, and ethical judgment of caregivers. Remember, AI should be a supportive tool, not the main guide, in Mrs. Miggins’ care journey.
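One way to picture the “Human-in-the-loop” principle is as a simple gate: an AI suggestion is recorded, but nothing happens until a named caregiver has reviewed it. The Python sketch below is illustrative only; the class, function names, caregiver, and confidence score are assumptions made for the example, not features of any real care system.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Recommendation:
    resident: str
    suggestion: str
    confidence: float  # hypothetical model score between 0 and 1
    approved_by: Optional[str] = None


def review(rec: Recommendation, caregiver: str, approve: bool) -> Recommendation:
    """Record a caregiver's decision; approval always carries a person's name."""
    rec.approved_by = caregiver if approve else None
    return rec


def can_act_on(rec: Recommendation) -> bool:
    """A suggestion may be acted on only after a caregiver has approved it."""
    return rec.approved_by is not None


rec = Recommendation("Mrs. Miggins", "Shorten the afternoon walk route", 0.92)
print(can_act_on(rec))  # False: a high score alone is not enough

review(rec, caregiver="Nurse Patel", approve=True)
print(can_act_on(rec))  # True: a caregiver has signed off
```

The design choice worth noting is that the model’s confidence never bypasses the gate; it can inform the caregiver’s judgment but cannot substitute for it.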

Navigating the Maze Confidently

The Care Quality Commission (CQC) provides crucial guidance for service providers on maintaining high standards in handling personal information across all services. Additionally, the Data Security and Protection Toolkit by Digital Social Care serves as a valuable resource for enhancing data security in the social care sector.

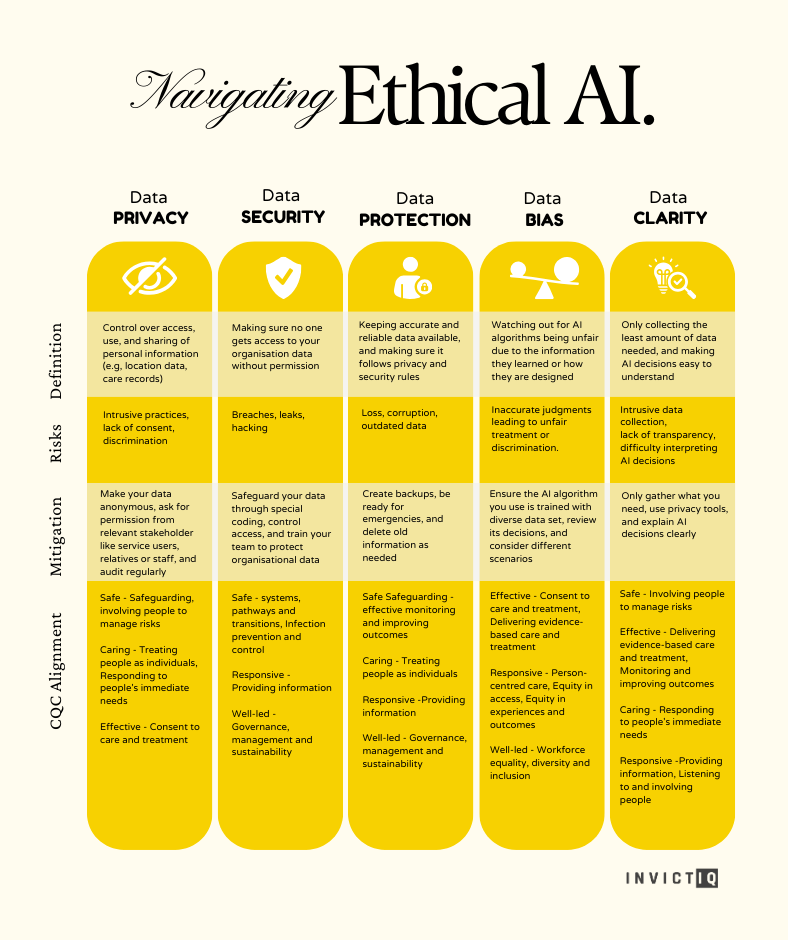

To help you navigate, here’s a handy table

Navigating audits and compliance can be challenging for care providers. Between paperwork, deadlines, and evolving rules, it is easy to get bogged down in bureaucracy. This distracts from what’s most important: providing exceptional care. InvictIQ offers solutions to simplify this process. Our cloud-based Audit on Cloud streamlines audit workflows so you can access unified, meaningful data anywhere. This saves time and frees up valued resources while ensuring transparency.

As you implement AI, InvictIQ helps guide the way with a Data Privacy Audit Tool for care providers using AI. This comprehensive assessment guides you through AI best practices, helping to ensure resident data is protected to the highest ethical standards. You can implement technology with peace of mind, knowing it serves residents with both innovation and unwavering respect for their privacy.

With streamlined audits and ethical AI guidance, InvictIQ enables you to focus where it matters most – on delivering exceptional person-centred care to Mrs. Miggins.

The Guiding Light

AI is not a magic spell; it is a powerful tool. But in the twilight of Mrs. Miggins’ years, the warmth of a caregiver’s hand should never be eclipsed by the cold glow of a screen. Let’s step into the AI world with thoughtful intention, building a future where AI serves hand-in-hand with compassion.

This journey through the ethical maze of AI in elderly care is complex, but prioritising data quality, building trust, and keeping humanity at the core ensures AI becomes a force for good. Let’s ensure that the well-being of residents like Mrs. Miggins remains paramount, not as mere lip service, but in every interaction, every decision, every moment of their experience.
